Theory 1 - Minimum mean square error

Suppose our problem is to estimate, guess, or predict the value of a random variable $X$.

There is no single best answer to this question. The “best guess” number depends on additional factors in the problem context.

One method is to pick a value where the PMF or PDF of $X$ is maximal (the most likely value).

Another method is to pick the expected value $E[X]$.

For the normal distribution, or any symmetric distribution with a single peak, these are the same value. For most distributions, though, they are not the same.
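To make the contrast concrete, here is a minimal sketch that computes both candidate estimates for a small skewed PMF. The PMF values are made up for illustration and are not from the text.

```python
# Hypothetical PMF of a discrete random variable X (made-up numbers).
pmf = {0: 0.5, 1: 0.2, 2: 0.1, 10: 0.2}

# Method 1: the most likely value, i.e. where the PMF is maximal.
mode = max(pmf, key=pmf.get)

# Method 2: the expected value E[X] = sum of x * P(X = x).
mean = sum(x * p for x, p in pmf.items())

print("most likely value:", mode)
print("expected value:", mean)
```

For this skewed PMF the two methods disagree: the most likely value is 0, while the expected value is pulled upward by the small chance of the outcome 10.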

Mean square error (MSE)

Given some estimate $\hat{x}$ for a random variable $X$, the mean square error (MSE) of $\hat{x}$ is:

$$
e = E\big[(X - \hat{x})^2\big]
$$

The MSE quantifies the typical (square of the) error. Error here means the difference between the true value $X$ and the estimate $\hat{x}$.
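As a rough illustration of the definition, the sketch below evaluates the MSE of two different estimates for a discrete variable. The helper name `mse` and the PMF are assumptions chosen for this example only.

```python
def mse(pmf, estimate):
    """Return E[(X - estimate)^2] for a discrete PMF given as {value: probability}."""
    return sum(p * (x - estimate) ** 2 for x, p in pmf.items())

# Hypothetical skewed PMF (made-up numbers, not from the text).
pmf = {0: 0.5, 1: 0.2, 2: 0.1, 10: 0.2}

mse_at_mode = mse(pmf, 0)    # estimate = most likely value of X
mse_at_mean = mse(pmf, 2.4)  # estimate = expected value of X

print(mse_at_mode, mse_at_mean)
```

Here the most likely value has a noticeably larger MSE than the expected value, because squaring penalizes the occasional large miss at the outcome 10.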

Other error measures are reasonable and useful in niche contexts. For example, the mean absolute error $E\big[|X - \hat{x}|\big]$.

In problem contexts where large errors are more costly than small errors (i.e. many real problems), the most likely value of $X$ can perform poorly as an estimate.

It turns out that the expected value $E[X]$ is also the estimate with minimal MSE.

Expected value minimizes MSE

Given a random variable $X$, its expected value $\hat{x} = E[X]$ is the estimate of $X$ with minimal mean square error. The MSE for $\hat{x} = E[X]$ is:

$$
e = E\big[(X - E[X])^2\big] = \mathrm{Var}[X]
$$

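Proof that $\hat{x} = E[X]$ minimizes MSE

Expand the MSE:

$$
E\big[(X - \hat{x})^2\big] = E[X^2] - 2\hat{x}\,E[X] + \hat{x}^2
$$

Now minimize this parabola in $\hat{x}$. Differentiate:

$$
\frac{d}{d\hat{x}}\,E\big[(X - \hat{x})^2\big] = -2E[X] + 2\hat{x}
$$

Find zeros:

$$
-2E[X] + 2\hat{x} = 0 \quad\Longrightarrow\quad \hat{x} = E[X]
$$

Since the coefficient of $\hat{x}^2$ is positive, this critical point is the minimum.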
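The result can be checked numerically. The following sketch, assuming a fair six-sided die as the example variable (an assumption for illustration), searches a grid of candidate estimates using exact fractions and compares the best one with $E[X]$ and $\mathrm{Var}[X]$.

```python
from fractions import Fraction

# Assumed example: X is the roll of a fair six-sided die.
values = range(1, 7)
p = Fraction(1, 6)

def mse(estimate):
    """E[(X - estimate)^2] for the fair die, computed exactly."""
    return sum(p * (x - estimate) ** 2 for x in values)

mean = sum(p * x for x in values)
variance = sum(p * (x - mean) ** 2 for x in values)

# Grid of candidate estimates 0.0, 0.1, ..., 7.0 as exact fractions.
candidates = [Fraction(k, 10) for k in range(0, 71)]
best = min(candidates, key=mse)

print("best candidate:", best, "E[X]:", mean)
print("MSE at best:", mse(best), "Var[X]:", variance)
```

The grid search lands on the expected value, and the MSE there coincides with the variance, as the proof predicts.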

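When the estimate $\hat{x}$ is made with no information beyond the distribution of $X$, the minimal MSE estimate is $\hat{x} = E[X]$.

In the presence of additional information, for example that event $A$ has occurred, the minimal MSE estimate is the conditional expectation $\hat{x}_A = E[X \mid A]$.

The MSE estimate can also be conditioned on another variable, say $Y$.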

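Minimal MSE of $X$ given $Y$

The minimal MSE estimate of $X$ given another variable $Y$:

$$
\hat{x}(y) = E[X \mid Y = y]
$$

The error of this estimate is $E\big[(X - \hat{x}(y))^2 \mid Y = y\big]$, which equals $\mathrm{Var}[X \mid Y = y]$.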
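A small worked example may help here. The sketch below computes the conditional expectation and conditional variance of $X$ for each value of $Y$ from a joint PMF. The joint PMF and the helper name `conditional_estimate` are hypothetical, chosen only for illustration.

```python
from fractions import Fraction as F

# Hypothetical joint PMF P(X = x, Y = y); the numbers are made up.
joint = {
    (0, 0): F(1, 4), (1, 0): F(1, 4),
    (1, 1): F(1, 8), (3, 1): F(3, 8),
}

def conditional_estimate(joint, y):
    """Return (E[X | Y=y], Var[X | Y=y]) for a joint PMF {(x, y): probability}."""
    p_y = sum(p for (_, yy), p in joint.items() if yy == y)          # P(Y = y)
    cond = {x: p / p_y for (x, yy), p in joint.items() if yy == y}   # P(X = x | Y = y)
    mean = sum(x * p for x, p in cond.items())
    var = sum(p * (x - mean) ** 2 for x, p in cond.items())
    return mean, var

for y in (0, 1):
    estimate, error = conditional_estimate(joint, y)
    print(f"y={y}: estimate {estimate}, MSE {error}")
```

Each observed value of $Y$ gets its own estimate of $X$, and the MSE of that estimate is the conditional variance for that value.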

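Notice that the minimal MSE estimate of $X$ given $Y$ can be used to define a random variable, $E[X \mid Y]$.

This is a derived variable from $Y$: apply the function $y \mapsto E[X \mid Y = y]$ to $Y$.

The variable $E[X \mid Y]$ gives the minimal MSE estimate of $X$ when only the value of $Y$ is observed.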

Theory 2 - Line of minimal MSE

Linear approximation is very common in applied math.

One could consider the linearization of the function $y \mapsto E[X \mid Y = y]$ at a single point, i.e. its tangent line there.

One could instead minimize the MSE over all linear functions of $Y$.

The difference here is:

- line of best fit at a single point vs.

- line of best fit over the whole range of $X$ and $Y$, weighted by likelihoods

Linear estimator: Line of minimal MSE

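Let $L$ be an arbitrary line $L(y) = ay + b$. Let $\hat{X}_L = aY + b$. The mean square error (MSE) of this line $L$ is:

$$
e_L = E\big[(X - \hat{X}_L)^2\big] = E\big[(X - aY - b)^2\big]
$$

The linear estimator of $X$ in terms of $Y$ is the line with minimal MSE, and it is:

$$
\hat{X}_L(Y) = \rho_{X,Y}\,\frac{\sigma_X}{\sigma_Y}\,(Y - \mu_Y) + \mu_X
$$

Here $\mu_X, \mu_Y$ are the means, $\sigma_X, \sigma_Y$ the standard deviations, and $\rho_{X,Y}$ the correlation coefficient of $X$ and $Y$.

The error value at the (best) linear estimator, $e_L^*$, is:

$$
e_L^* = \sigma_X^2\,\big(1 - \rho_{X,Y}^2\big)
$$

Theorem: The error variable of the linear estimator, $X - \hat{X}_L(Y)$, is perfectly uncorrelated with $Y$.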
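These statements can be checked on a small example. The sketch below, using a made-up joint PMF and a hypothetical helper `expect`, builds the line of minimal MSE and verifies both the error formula and the uncorrelated-error theorem with exact fractions. It uses the standard identities that the slope $\rho\,\sigma_X/\sigma_Y$ equals $\mathrm{Cov}[X,Y]/\mathrm{Var}[Y]$ and that $\sigma_X^2(1-\rho^2)$ equals $\mathrm{Var}[X] - \mathrm{Cov}[X,Y]^2/\mathrm{Var}[Y]$, which avoids square roots.

```python
from fractions import Fraction as F

# Hypothetical joint PMF P(X = x, Y = y); made-up numbers for illustration.
joint = {(0, 0): F(1, 4), (1, 1): F(1, 4), (1, 2): F(1, 4), (4, 2): F(1, 4)}

def expect(g):
    """E[g(X, Y)] under the joint PMF."""
    return sum(p * g(x, y) for (x, y), p in joint.items())

mu_x, mu_y = expect(lambda x, y: x), expect(lambda x, y: y)
var_x = expect(lambda x, y: (x - mu_x) ** 2)
var_y = expect(lambda x, y: (y - mu_y) ** 2)
cov = expect(lambda x, y: (x - mu_x) * (y - mu_y))

# Line of minimal MSE: slope Cov[X, Y] / Var[Y], passing through (mu_y, mu_x).
a = cov / var_y
b = mu_x - a * mu_y

# MSE of that line, and covariance between the error X - (aY + b) and Y.
mse_line = expect(lambda x, y: (x - (a * y + b)) ** 2)
err_mean = expect(lambda x, y: x - (a * y + b))
err_cov_y = expect(lambda x, y: (x - (a * y + b) - err_mean) * (y - mu_y))

print("slope:", a, "intercept:", b)
print("MSE of line:", mse_line, "formula:", var_x - cov ** 2 / var_y)
print("Cov[error, Y]:", err_cov_y)
```

The directly computed MSE agrees with the closed-form error, and the covariance between the error and $Y$ is exactly zero for this example.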

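Slope and $\rho$

Notice:

$$
\frac{\hat{X}_L - \mu_X}{\sigma_X} = \rho_{X,Y}\,\frac{Y - \mu_Y}{\sigma_Y}
$$

Thus, for standardized variables $\tilde{X} = (X - \mu_X)/\sigma_X$ and $\tilde{Y} = (Y - \mu_Y)/\sigma_Y$, it turns out $\rho_{X,Y}$ is the slope of the linear estimator.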

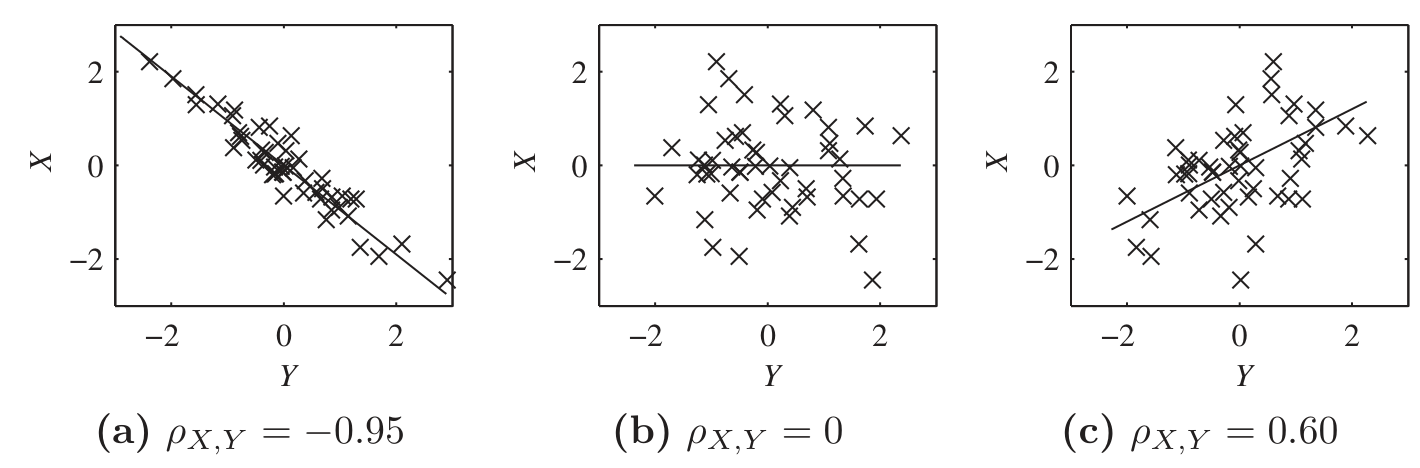

In each graph, the line of minimal MSE is the “best fit” line; for standardized variables it is $\hat{X}_L(Y) = \rho_{X,Y}\,Y$.